Introduction

In this blog, we’ll go through a basic introduction to LangChain, an open-source framework designed to facilitate the development of applications powered by language models. LangChain gives developers tools for data awareness and agentic behaviour, so that these models can connect to external data, interact with their environment, and solve complex problems.

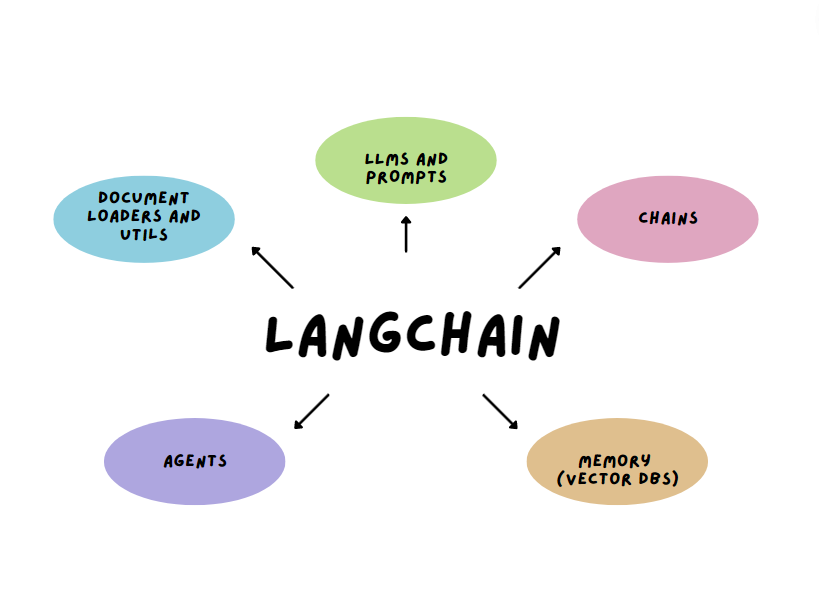

At the core of LangChain lies the concept of chaining different components together to tackle complex problems using LLMs. A chain can consist of multiple components drawn from different LangChain modules, and this modular approach lets developers combine the strengths of LLMs with the other capabilities the framework provides.

The framework offers modules such as models, prompts, memory, indexes, chains, agents, and callbacks, which developers can use to extend the capabilities and behaviour of their language models.

Throughout this blog, we provide a basic overview of the key modules offered by LangChain: models for connecting to various language models, prompts for guiding the model’s output, memory for persisting context, indexes for interacting with external data, chains for solving complex tasks, and agents for decision-making and action execution.

Why did LangChain become so popular?

Before starting with LangChain, let’s see how it became so popular in such a short time.

The debut of ChatGPT in November 2022 marked a shift in generative AI by making it widely accessible. It was followed by the release of many more Large Language Models (LLMs) with a wide range of applications. Based on OpenAI’s GPT-3.5 LLM, ChatGPT played a pivotal role in the popularity of generative AI.

Large Language Models (LLMs) such as GPT-3.5 have changed the field of natural language processing (NLP) by enabling machines to understand and generate human-like text. These models are trained on vast amounts of data, which allows them to capture intricate patterns and nuances of language. LLMs perform well on various NLP tasks such as machine translation, text summarization, sentiment analysis, and more. They have proven useful for improving human-computer interaction and for powering virtual assistants, chatbots, and other conversational agents.

As LLMs were becoming popular, Harrison Chase started LangChain as an open-source project in October 2022. Soon after, with the launch of ChatGPT and the subsequent popularity of LLMs, interest rose in LLM-powered applications that solve complex problems. LangChain provided easy and efficient integrations with most LLMs, along with add-on functionality to extend applications further. This led to a rapid rise in the popularity of LangChain for LLM-powered applications.

Let’s get started with the LangChain modules. First, we’ll get a brief overview of LangChain’s structure & then delve a bit deeper into each of its modules.

LangChain Modules

The framework is organised into modules, each serving a distinct purpose in managing interactions with Large Language Models (LLMs). These modules give developers a structured way to handle the different facets of LLM interactions and to control the behaviour and capabilities of the models. By using the functionality each module offers, developers can tailor their LLM interactions to their application’s requirements.

- Models – LangChain provides a standard interface to connect to different models such as LLMs, Chat Models (like ChatGPT), and text embedding models.

- Prompts – The Prompts module provides standard PromptTemplates for LLMs, ChatModels, etc. Some commonly used PromptTemplates are available to use as-is. This helps in constructing complex prompts and makes prompts more reusable, which enables better prompt management & optimization. Output Parsers are also available to parse LLM outputs into the format we want.

- Memory – LangChain provides the ability to persist state between multiple interactions, making any LLM/ChatModel/Chain/Agent stateful. Memory refers to this persistent state, that is, the ability to carry context from previous calls into subsequent calls made to the models/LLMs in use. This is very useful when creating chat-like applications.

- Indexes – To make our LLMs interact with data, we need interfaces and integrations for loading/querying/updating external data. LangChain provides multiple ways to do this, such as Text Splitters, Document Loaders, VectorStores, and Retrievers.

- Chains – Chains are the key building block of LangChain. Chains enable combining LLMs & Prompts to solve tasks. Multiple Chains can be combined to perform a series of tasks that solve complex problems. LangChain provides many chains to use out-of-the-box, like SQL chain, LLM Math chain, Sequential Chain, Router Chain, etc.

- Agents – An agent is an LLM/model that decides which action to take, executes that action, and then observes the outcome, repeating this until it completes its objective. Agents enable LLMs to use tools like search engines, APIs, etc. LangChain has a lot of built-in tools like Wikipedia, Twilio, Zapier, Python REPL, etc.

- Callbacks – The Callbacks module lets users monitor, log, trace, and stream the output of LLM applications. Callbacks can be called independently, deeply nested, or scoped to specific requests, and they can be placed at any stage of the application.
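
Callbacks are not covered by a separate example later in this post, so here is a minimal sketch of the idea in plain Python. It is not LangChain's callback API: `CallbackManager`, `fake_llm`, and the event names `llm_start`, `llm_new_token`, and `llm_end` are hypothetical names chosen for illustration, and the "model" is a stub that emits three fixed tokens.

```python
from typing import Callable, Dict, List


class CallbackManager:
    """Hypothetical event dispatcher, for illustration only."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[str], None]]] = {}

    def on(self, event: str, handler: Callable[[str], None]) -> None:
        # Register a handler for a named event.
        self._handlers.setdefault(event, []).append(handler)

    def emit(self, event: str, payload: str) -> None:
        # Call every handler registered for this event, in order.
        for handler in self._handlers.get(event, []):
            handler(payload)


def fake_llm(prompt: str, callbacks: CallbackManager) -> str:
    """Stub model that 'streams' three fixed tokens and reports each stage."""
    callbacks.emit("llm_start", prompt)
    tokens = ["Hello", " ", "world"]
    for token in tokens:
        callbacks.emit("llm_new_token", token)
    text = "".join(tokens)
    callbacks.emit("llm_end", text)
    return text


log: List[str] = []
manager = CallbackManager()
manager.on("llm_start", lambda p: log.append(f"start: {p}"))
manager.on("llm_new_token", lambda t: log.append(f"token: {t!r}"))
manager.on("llm_end", lambda r: log.append(f"end: {r}"))

result = fake_llm("Say hello", manager)
```

After the call, `log` holds one entry per stage, which is the kind of hook that logging, tracing, or token streaming can be attached to.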

Let’s go into a little more detail about the different modules and also explore how we can use these modules while building with LLMs.

Types of Models in LangChain

LangChain provides standard interfaces for using three different types of models, namely:

LLMs

Large Language Models (LLMs) are machine learning models capable of performing natural language tasks like text generation, summarization, text classification, translation, etc. These models take text as input & provide text as output. LangChain provides a standard interface to interact with a variety of LLMs like GPT-3 by OpenAI, BERT by Google, RoBERTa by Facebook AI, T5 by Google, CTRL by Salesforce Research, Megatron-Turing by NVIDIA, etc.

Chat Models

Chat Models are a variation of LLMs. These models use LLMs under the hood, but instead of a plain text-in, text-out interface they expose a chat-like interface that takes a list of chat messages as input and returns a chat message as output. They specialise in chatting with a user.

Text Embedding Models

Embeddings create a vector representation of a piece of text. They are useful because they let us think about text in a vector space and do things like semantic search. These models take text as input and return a list of numbers, the embedding of the text.

The Embeddings class is designed for interfacing with embedding models. LangChain provides a standard interface for embedding models from providers such as OpenAI, Cohere, etc.
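
To make the idea of semantic search over embeddings concrete, here is a small sketch in plain Python. The three-dimensional vectors in `toy_embeddings` are made up for illustration (a real embedding model returns much longer vectors), and `cosine_similarity` and `most_similar` are hypothetical helper names rather than LangChain functions.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings: texts with similar meaning get nearby vectors.
toy_embeddings: Dict[str, List[float]] = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9],
}


def most_similar(query: str, vectors: Dict[str, List[float]]) -> str:
    """Return the stored text whose vector is closest to the query's vector."""
    query_vec = vectors[query]
    candidates = {k: v for k, v in vectors.items() if k != query}
    return max(candidates, key=lambda k: cosine_similarity(query_vec, candidates[k]))


nearest_to_cat = most_similar("cat", toy_embeddings)
```

With these toy vectors, "kitten" is the closest entry to "cat", because their vectors point in almost the same direction while the vector for "car" does not.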

Example

We’ll use the OpenAI wrapper in LangChain to generate text from OpenAI’s “curie” model.

from langchain.llms import OpenAI

import os

os.environ['OPENAI_API_KEY'] = '<your-openai-api-key>'

llm = OpenAI(model_name="text-curie-001", temperature=0.5, n=1, max_tokens=100)

llm("Tell me something about the universe.")

Output from LLM

'\n\nThere are an estimated 100 billion galaxies in the observable universe.'

Creating Prompts in LangChain

Prompting is the new way of programming NLP models. Prompts are inputs provided to LLMs to get desired output from the model. In other words, a prompt is a piece of text that is used to guide the model’s output. Prompts can be used to perform a variety of tasks, such as text classification, text generation, and question answering.

Creating good prompts is hard. Asking the same thing in a different way can lead to a result that is more or less accurate, and the right prompt can also depend on the use case. LangChain provides a way to create prompts and reuse them through Prompt Templates. As the name implies, prompt templates are templates that we can reuse to ask things from our model. A template may contain variables that the user can change to adapt the prompt to a particular use case.

LangChain provides:

- Prompts: A standard interface for using prompts

- Prompt Templates: A standard interface for building & using prompt templates

- Example Selectors: methods for inserting examples into the prompt for the language model to follow

- Output Parsers: methods for inserting instructions into the prompt that describe the format in which the language model should output information, as well as methods for parsing that string output into a structured format
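
The two halves of an output parser described in the last bullet can be sketched in plain Python. This is an illustration of the idea, not LangChain's parser classes: `FORMAT_INSTRUCTIONS`, `build_prompt`, and `parse_comma_separated` are hypothetical names, and the raw model output is a hard-coded string.

```python
from typing import List

# Instructions appended to the prompt so the model answers in a known format.
FORMAT_INSTRUCTIONS = (
    "Your response should be a list of comma separated values, "
    "e.g. `foo, bar, baz`."
)


def build_prompt(question: str) -> str:
    """Attach the format instructions to the user's question."""
    return f"{question}\n{FORMAT_INSTRUCTIONS}"


def parse_comma_separated(raw_output: str) -> List[str]:
    """Turn the model's raw string output into a clean Python list."""
    return [item.strip() for item in raw_output.strip().split(",") if item.strip()]


prompt_text = build_prompt("List three primary colours.")
# Hard-coded stand-in for what a model might return.
colours = parse_comma_separated("\n\nred, green ,blue\n")
```

The same pair of steps, instruct then parse, applies to other target formats such as JSON.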

Example

Let’s create a Prompt Template & reuse it in 2 different interactions with an LLM.

First, pass the OpenAI API key

import os

os.environ['OPENAI_API_KEY'] = '<your-openai-api-key>'

Next, make the required imports from langchain

from langchain.llms import OpenAI
from langchain import PromptTemplate

Instantiate the LLM model we want to use

llm = OpenAI(model='text-curie-001', temperature=0.5, n=1, max_tokens=100)

Make a Prompt Template with variables in curly braces

template = '''I want you to act as a {profession} professional.
I will provide you with a query regarding your profession.

Query: {query}

Answer the query in a way that is most suitable for your profession.
'''

prompt = PromptTemplate.from_template(template)

Check input variables for prompt generated

prompt.input_variables

Output

['profession', 'query']

Pass in values for input variables & see the prompt get formatted as required.

prompt.format(profession='doctor', query='What is the best way to treat a patient with a broken leg?')

Output

'I want you to act as a doctor professional.\nI will provide you with a query regarding your profession.\n\nQuery: What is the best way to treat a patient with a broken leg?\n\nAnswer the query in a way that is most suitable for your profession.\n'

Use the prompt to get a response from Model

llm(prompt.format(profession='doctor', query='What is the best way to treat a patient with a broken leg?'))

Output

'\nThe best way to treat a patient with a broken leg is to immobilise the leg with a cast or splint. This will prevent the leg from moving and help to heal the fracture.'

Reusing the prompt with different values

llm(prompt.format(profession='lawyer', query='What is the process to get a divorce in US?'))

Output

'\nThere is no one-size-fits-all answer to this question, as the process to get a divorce in the United States will vary depending on the specific state in which the divorce is being sought. However, generally speaking, a couple will first need to file a petition for divorce with the appropriate state court. After the petition has been filed, the court will hold a hearing to determine whether or not the couple is actually divorced. If the court finds that the couple is divorced, it will'

What are Chains in LangChain?

Solving complex problems involves more than just querying our LLM. We may want to query the LLM based on previous queries, access a search engine midway, and so on. These kinds of solutions require the LLM to be paired with other components, for example agents, or LLM calls chained together like a pipeline. This can be achieved with the help of chains in LangChain.

Chains are the key building block of LangChain. A simple chain consists of a PromptTemplate paired with our LLM; this is LLMChain in LangChain. We can build more complex chains and even run these chains sequentially to solve more complex problems. As an analogy, think of a pipeline in which different chains perform different tasks, each one feeding the next.
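
The pipeline analogy can be sketched in a few lines of plain Python. This is an illustration of the concept, not how LangChain implements its chains: `make_chain`, `run_sequential`, and `echo_llm` are hypothetical names, and `echo_llm` is a stub that upper-cases its prompt so the data flow is easy to follow without an API key.

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]
Chain = Callable[[Dict[str, str]], Dict[str, str]]


def make_chain(template: str, llm: LLM, output_key: str) -> Chain:
    """Pair a prompt template with a model; the result is stored under output_key."""

    def run(inputs: Dict[str, str]) -> Dict[str, str]:
        prompt = template.format(**inputs)  # fill the template variables
        return {**inputs, output_key: llm(prompt)}

    return run


def run_sequential(chains: List[Chain], inputs: Dict[str, str]) -> Dict[str, str]:
    """Run chains one after another, passing the growing state along."""
    state = dict(inputs)
    for chain in chains:
        state = chain(state)
    return state


def echo_llm(prompt: str) -> str:
    """Stub model: returns the prompt in upper case."""
    return prompt.upper()


first = make_chain("Write about {topic}.", echo_llm, "draft")
second = make_chain("Summarise: {draft}", echo_llm, "summary")
result = run_sequential([first, second], {"topic": "chains"})
```

The second chain reads the `draft` key written by the first one, which is the same hand-off that the `output_key` arguments express in the sequential chain example later in this post.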

Example

Create a simple LLMChain to interact with OpenAI’s curie model

First, pass the OpenAI API key

import os

os.environ['OPENAI_API_KEY'] = '<your-openai-api-key>'

Next, make the required imports from langchain

from langchain.llms import OpenAI
from langchain import PromptTemplate
from langchain.chains import LLMChain

Instantiate the LLM model we want to use

llm = OpenAI(model='text-curie-001', temperature=0.5, n=1, max_tokens=100)

Make a Prompt Template for the chain

template = "Tell me a {adjective} joke about {content}."
prompt = PromptTemplate.from_template(template)

Make a chain with llm & prompt

chain = LLMChain(llm=llm, prompt=prompt)

Run the chain & pass values to input variables

chain.run({
    'adjective': 'funny',
    'content': 'engineering'
})

Output

'\n\nWhy did the engineer cross the road?\n\nTo get to the other engineering department!'

Example 2

Let’s create a sequential chain consisting of three LLM chains with different tasks.

- The first chain will generate some text from our LLM on a user-specified topic in the user’s choice of language.

- The second chain will translate this text into English.

- The third chain will review the writing style of the translated text.

First, pass the OpenAI API key

import os

os.environ['OPENAI_API_KEY'] = '<your-openai-api-key>'

Next, make the required imports from langchain

from langchain.llms import OpenAI
from langchain import PromptTemplate
from langchain.chains import SequentialChain
from langchain.chains import LLMChain

Make our first chain for generating text in the language of choice

llm = OpenAI(model='text-davinci-003', temperature=0.8)
prompt = PromptTemplate.from_template("""Write a short paragraph on the {topic} in language: {language}.""")
chain_one = LLMChain(llm=llm, prompt=prompt, output_key="paragraph")

Make our second chain for translating generated text in English

llm = OpenAI(model='text-davinci-003', temperature=0)
prompt = PromptTemplate.from_template("""Act as a language expert. Given a short paragraph identify its language & translate it to English.
Paragraph: {paragraph}
Translate the paragraph into English""")
chain_two = LLMChain(llm=llm, prompt=prompt, output_key="english_paragraph")

Make our third chain for reviewing translated text

llm = OpenAI(model='text-curie-001', temperature=0.5)
prompt = PromptTemplate.from_template("""Act as an English professor. Review the writing style for a given English paragraph written by a student.
Paragraph: {english_paragraph}
Provide your review for above paragraph.""")
# Named chain_three so that it does not overwrite chain_two
chain_three = LLMChain(llm=llm, prompt=prompt, output_key="review")

Make a final combined chain consisting of all three chains

combined_chain = SequentialChain(
    chains=[chain_one, chain_two, chain_three],
    input_variables=['topic', 'language'],
    output_variables=['paragraph', 'english_paragraph', 'review'],
    verbose=True
)

Run the chain & pass values to input variables

combined_chain({'topic': 'Napoleon Bonaparte', 'language': 'Spanish'})

Output

> Entering new SequentialChain chain...

> Finished chain.

{'topic': 'Napoleon Bonaparte', 'language': 'Spanish', 'paragraph': '\n\nNapoleón Bonaparte fue un militar y estadista francés que revolucionó el concepto de la política y la guerra en el siglo XIX. Él fue el líder de la Revolución Francesa y del primer Imperio Francés. Logró numerosas victorias militares que aseguraron su éxito como gobernante. Durante su reinado, estableció una serie de leyes y una amplia red de caminos y universidades, entre otros logros.', 'english_paragraph': ':\n\nNapoleon Bonaparte was a French military and statesman who revolutionised the concept of politics and war in the 19th century. He was the leader of the French Revolution and the first French Empire. He achieved numerous military victories that ensured his success as a ruler. During his reign, he established a series of laws and a wide network of roads and universities, among other achievements.', 'review': '\n\nThe paragraph is well-written and flows smoothly. The author uses effective techniques such as parallelism and repetition to create a strong and coherent argument. The vocabulary is accurate and the grammar is correct.'}

Maintain context with Memory

By default, Chat Models & Agents are stateless, i.e. they do not maintain any context between subsequent calls made to them. We may want these models to persist context between calls; for example, we would want a chatbot to maintain the context of the conversation between messages. Depending on our use case, we may want to persist state for the short term or the long term, and we might need only part of the context rather than all of it.

LangChain’s Memory module provides solutions for many different use cases. It offers helper utilities to manage & manipulate previous messages, and ways to incorporate them into chains for complex LLM applications.

Different Types of Memory in LangChain

- Conversation Buffer Memory: Stores messages and then extracts them into a variable.

- Conversation Buffer Window Memory: Stores only the last K interactions.

- Entity Memory: Stores information about specific entities.

- Conversation Knowledge Graph Memory: Stores interactions as a knowledge graph.

- Conversation Summary Memory: Stores a summary of the interactions instead of the complete interactions.

- Conversation Summary Buffer Memory: Keeps recent interactions verbatim and summarises older ones once a token limit is exceeded.

- Conversation Token Buffer Memory: Keeps the most recent interactions, up to a specified token limit.

- Vector Store-Backed Memory: Stores interactions in vector stores.
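
To show what "store only the last K interactions" means in practice, here is a small plain-Python sketch. It is not LangChain's memory implementation: `WindowMemory` and its methods are hypothetical names, and the conversation turns are hard-coded.

```python
from collections import deque
from typing import Deque, Tuple


class WindowMemory:
    """Keeps only the last k (human, ai) exchanges."""

    def __init__(self, k: int) -> None:
        # A deque with maxlen drops the oldest item automatically.
        self._turns: Deque[Tuple[str, str]] = deque(maxlen=k)

    def save(self, human: str, ai: str) -> None:
        self._turns.append((human, ai))

    def as_prompt_history(self) -> str:
        """Render the remembered turns the way they would be placed in a prompt."""
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self._turns)


memory = WindowMemory(k=2)
memory.save("Hello", "Hi there")
memory.save("How are you?", "Fine")
memory.save("What is LangChain?", "A framework")

history = memory.as_prompt_history()
```

With `k=2`, the first exchange has already been dropped by the time `history` is built. That is the trade-off of a window: the prompt stays short, but older context is lost.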

Example

Interacting with OpenAI’s curie model while preserving old chat history using a conversational chain

First, pass the OpenAI API key

import os

os.environ['OPENAI_API_KEY'] = '<your-openai-api-key>'

Next, make the required imports from langchain

from langchain.llms import OpenAI
from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory

Instantiate the LLM model we want to use

llm = OpenAI(model='text-curie-001', temperature=0.5, n=1, max_tokens=100)

Make a conversational chain to use memory

conversation = ConversationChain(
    llm=llm,
    verbose=True,
    memory=ConversationBufferMemory()
)

Start the conversation

conversation.predict(input='Hello, how are you?')

Output

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.

Current conversation:

Human: Hello, how are you?

AI:

> Finished chain.

" I'm doing well, thank you for asking. How about you?"

Continue the conversation

conversation.predict(input='How was your day?')

Output – The previous conversation is also being passed in the prompt

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.

Current conversation:

Human: Hello, how are you?

AI: I'm doing well, thank you for asking. How about you?

Human: How was your day?

AI:

> Finished chain.

'\n\nI had a good day. I met a lot of new people and learned a lot.'

Ask something from the previous reply

conversation.predict(input='Tell me any one from those')

Output – The previous conversation is passed in the prompt & the model is able to understand the question about the previous reply.

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.

Current conversation:

Human: Hello, how are you?

AI: I'm doing well, thank you for asking. How about you?

Human: How was your day?

AI:

I had a good day. I met a lot of new people and learned a lot.

Human: Tell me any one from those

AI:

> Finished chain.

'\n\nThere was a woman I met who is a doctor. She was very informative and helpful.'

More continued conversation

conversation.predict(input='What was her name?')

Output – The previous conversation is passed in the prompt

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.

Current conversation:

Human: Hello, how are you?

AI: I'm doing well, thank you for asking. How about you?

Human: How was your day?

AI:

I had a good day. I met a lot of new people and learned a lot.

Human: Tell me any one from those

AI:

There was a woman I met who is a doctor. She was very informative and helpful.

Human: What was her name?

AI:

> Finished chain.

" \n\nI'm sorry, I don't remember her name."

What Indexes are provided in LangChain?

Data is often said to be the new oil. Most companies hold data that they depend on for making decisions. Any LLM-based application built to manipulate or analyse this data needs an interface through which the LLM can interact with it. The Indexes module provides multiple ways to let our LLMs interact with external data.

Indexes refer to ways to structure documents so that LLMs can best interact with them. LangChain provides multiple Indexes that we can use.

- Document Loaders: Document Loaders provide methods to load data from different forms of documents (for example csv_loader, json_loader, etc).

- Text Splitters: Text Splitters help in splitting a large document into smaller documents.

- Vectorstores: Vectorstores provide fast ways to find relevant documents by embeddings as they store documents and associated embeddings.

- Retrievers: Retrievers are used to fetch documents that provide answers to queries asked by users.
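
As an illustration of what a text splitter does, here is a minimal character-based sketch in plain Python. It is not LangChain's splitter: `split_text` is a hypothetical helper, and the `chunk_size` and `chunk_overlap` parameter names are chosen for this sketch. Overlapping chunks are a common way to avoid cutting a sentence's context exactly at a chunk boundary.

```python
from typing import List


def split_text(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share chunk_overlap characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")

    step = chunk_size - chunk_overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


chunks = split_text("abcdefghij", chunk_size=4, chunk_overlap=1)
```

Here the ten-character string becomes three chunks, and each chunk starts with the last character of the previous one. Each chunk would then be embedded and stored in a vector store so that a retriever can find the most relevant pieces for a query.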

Example

Indexing a document and making queries to content within it

First, pass the OpenAI API key

import os

os.environ['OPENAI_API_KEY'] = '<your-openai-api-key>'

Next, make the required imports from langchain

from langchain.document_loaders import TextLoader
from langchain.indexes import VectorstoreIndexCreator

Download a text document from the web.

import requests

url = "https://raw.githubusercontent.com/hwchase17/langchain/master/docs/modules/paul_graham_essay.txt"

response = requests.get(url)

data = response.text

with open("paul_graham_essay.txt", "w", encoding="utf8") as text_file:
    text_file.write(data)

Load the document with the TextLoader.

loader = TextLoader('paul_graham_essay.txt', encoding='utf8')

Create a vector store index from the documents that our text loader provides.

index = VectorstoreIndexCreator().from_loaders([loader])

Now, ask questions about the data.

query = "What does Paul graham say in his essay?"

index.query(query)

Output

' Paul Graham says that he figured out what to work on when he started publishing essays online. He also says that he knew that online essays would be a marginal medium at first, but he found that encouraging instead of discouraging.'

Ask questions along with sources of response received

index.query_with_sources(query)

Output

{'question': 'What does Paul graham say in his essay?',

'answer': ' In his essay, Paul Graham talks about how he had to ban himself from writing essays while he was working on Bel, how he was met with angry responses when he wrote about Lisp being better than other languages, how he was envious of his friend Robert Morris for finding a spectacular way to get out of grad school, how he was able to write his dissertation in 5 weeks, and how he figured out what to work on when he started publishing essays online.\n',

'sources': 'paul_graham_essay.txt'}

What are Agents in LangChain?

Based on user inputs, we might want our LLM to perform different tasks. To achieve this we can use agents, which follow a loop: decide on an action, perform that action, observe its outcome, and repeat until the desired objective is achieved. To enable agents to do this, we can let the model use various tools like Wikipedia, Python REPL, etc.

LangChain has two types of agents, namely Action Agents & Plan-and-Execute Agents. Action Agents are more suitable for small tasks, whereas Plan-and-Execute Agents are better suited to complex or long-running tasks. LangChain also provides many tools out-of-the-box for these agents to use, & users can build custom tools if required.

Action Agents decide on the next action & execute it, repeating this until they achieve their objective. Plan-and-Execute Agents first create a plan of actions & then execute the actions in that plan one at a time.
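
The decide, act, observe loop of an action agent can be sketched in plain Python. This is a conceptual illustration, not LangChain's agent executor: `run_agent`, `scripted_policy`, and `calculator` are hypothetical names, and the "decision" step is a scripted function standing in for an LLM.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]
# A policy looks at the observations so far and returns (action, action_input).
# The special action "finish" ends the loop and carries the final answer.
Policy = Callable[[List[str]], Tuple[str, str]]


def run_agent(policy: Policy, tools: Dict[str, Tool], max_steps: int = 5) -> str:
    """Repeat decide -> act -> observe until the policy finishes."""
    observations: List[str] = []
    for _ in range(max_steps):
        action, action_input = policy(observations)       # decide
        if action == "finish":
            return action_input
        observations.append(tools[action](action_input))  # act and observe
    raise RuntimeError("agent did not finish within max_steps")


def scripted_policy(observations: List[str]) -> Tuple[str, str]:
    """Stand-in for the LLM: first use the calculator, then answer."""
    if not observations:
        return ("calculator", "6*7")
    return ("finish", f"The answer is {observations[-1]}")


def calculator(expression: str) -> str:
    """Tiny tool that multiplies two integers written as 'a*b'."""
    left, right = expression.split("*")
    return str(int(left) * int(right))


answer = run_agent(scripted_policy, {"calculator": calculator})
```

The `max_steps` guard reflects a practical concern with such loops: a model that never decides to finish would otherwise run forever.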

Example

Let’s create a ReAct Agent which queries Wikipedia pages to answer the questions asked and see its thought process.

A bit of overview on ReAct.

Chain-of-thought prompting is a method of prompting that makes our LLM reason about the answer it provides. It lets the LLM go over a problem step by step, reasoning at each step. In other words, we ask the LLM to solve a complex problem by breaking it into smaller problems; the LLM does this decomposition on its own and gives its reasons along the way.

ReAct (which can be thought of as Reason + Act) builds upon this & enables the LLM to combine reasoning with actions. It allows the LLM to perform actions based on its own reasoning; these actions could be using a search engine, using a calculator, using Wikipedia, etc.
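
For the reasoning and the acting to be combined, something has to turn the model's text into a tool call. The sketch below parses the `Action: Name[input]` line format that appears in the agent trace in this post. It is an illustration only: `ACTION_PATTERN` and `parse_action` are hypothetical names, and this is not how LangChain's own parser is written.

```python
import re
from typing import Optional, Tuple

# Matches lines such as "Action: Search[Edison]" or "Action: Finish[1876]".
ACTION_PATTERN = re.compile(r"Action:\s*(\w+)\[(.*?)\]")


def parse_action(llm_output: str) -> Optional[Tuple[str, str]]:
    """Extract (tool_name, tool_input) from a ReAct-style model output."""
    match = ACTION_PATTERN.search(llm_output)
    if match is None:
        return None  # the model produced no recognisable action
    return match.group(1), match.group(2)


step = parse_action("Thought: I need to search Edison.\nAction: Search[Edison]")
final = parse_action("Action: Finish[1876]")
```

An agent loop would look up the first element in its tool list, call that tool with the second element, and feed the result back to the model as the next observation.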

In our example, we will build a ReAct agent which can query Wikipedia pages to answer internal questions while it reasons to achieve a final answer for the initial query.

First, pass the OpenAI API key

import os

os.environ['OPENAI_API_KEY'] = '<your-openai-api-key>'

Next, make the required imports from langchain

from langchain.llms import OpenAI

from langchain import Wikipedia

from langchain.agents import Tool, initialize_agent, AgentType

from langchain.agents.react.base import DocstoreExplorer

Instantiate the Model & also initialise the ReAct Agent

docstore=DocstoreExplorer(Wikipedia())

tools = [

Tool(

name="Search",

func=docstore.search,

description="useful for when you need to ask with search"

),

Tool(

name="Lookup",

func=docstore.lookup,

description="useful for when you need to ask with lookup"

)

]

llm = OpenAI(model='text-davinci-002', temperature=0)

react = initialize_agent(tools, llm, agent=AgentType.REACT_DOCSTORE, verbose=True)

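Make a query to the agent

question = "Who is the first president of the United States? Which year did he become the president?"

react.run(question)

Output – The agent’s thought process can be seen in the trace below. Note that the captured trace comes from a run that asked when Edison invented the light bulb, not from the question above. Its final answer also needs checking: in the observation, 1876 is the year Edison set up his Menlo Park laboratory, not a date given for the light bulb.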

> Entering new AgentExecutor chain...

Thought: I need to search Edison and find when he invented the bulb.

Action: Search[Edison]

Observation: Thomas Alva Edison (February 11, 1847 - October 18, 1931) was an American inventor and businessman. He developed many devices in fields such as electric power generation, mass communication, sound recording, and motion pictures. These inventions, which include the phonograph, the motion picture camera, and early versions of the electric light bulb, have had a widespread impact on the modern industrialised world. He was one of the first inventors to apply the principles of organised science and teamwork to the process of invention, working with many researchers and employees. He established the first industrial research laboratory.Edison was raised in the American Midwest. Early in his career he worked as a telegraph operator, which inspired some of his earliest inventions. In 1876, he established his first laboratory facility in Menlo Park, New Jersey, where many of his early inventions were developed. He later established a botanical laboratory in Fort Myers, Florida, in collaboration with businessmen Henry Ford and Harvey S. Firestone, and a laboratory in West Orange, New Jersey, that featured the world's first film studio, the Black Maria. With 1,093 US patents in his name, as well as patents in other countries, Edison is regarded as the most prolific inventor in American history. Edison married twice and fathered six children. He died in 1931 due to complications from diabetes.

Thought: Edison invented the light bulb in 1876.

Action: Finish[1876]

> Finished chain.

'1876'

Conclusion

In conclusion, LangChain is an open-source framework that helps developers use large language models (LLMs) in their applications. With its modular approach and easy integration with various LLMs, LangChain provides the tools needed to tackle complex problems. By combining LLMs, prompt templates, memory, external data interactions, and agent capabilities, developers can build applications that make use of the advances in generative AI and natural language processing, including conversational agents and virtual assistants.

